Shield AI's Autonomous Fighter Jet: What It Is and Why It's Already a Problem

So, Shield AI just pulled the silk cloth off its new autonomous fighter jet, the X-BAT. And of course, the tech and military worlds are losing their collective minds. The company unveiled it in D.C. to a room full of suits and brass, promising a future where pilotless jets leap vertically from container ships in the middle of the Pacific to go fight our wars. It’s powered by “Hivemind,” the same AI brain that supposedly flew an F-16 in a dogfight against a human pilot (source: “A new autonomous fighter jet just broke cover. It's powered by the same AI brain that flew an F-16 through a dogfight.”).

They’re selling a revolution. A clean, efficient, push-button conflict where “attritable” assets—a bloodless corporate term for disposable planes—can be sacrificed without shedding a tear or a headline about a fallen pilot. The Air Force Secretary even took a joyride in the AI-piloted F-16 and called it a “transformational moment.”

It all sounds incredible. It sounds like the future we see in movies. And I’m sure the PowerPoint presentation was spectacular. But every time I hear these slick pitches from a defense tech unicorn, I can’t help but feel like I’m watching a Fyre Festival promo video for World War III. They’re selling a luxury experience on a private island, but what you’re actually going to get is a disaster in a muddy field with a cheese sandwich.

The Reality Check from the Front Lines

Meanwhile, back on planet Earth, there’s a real war happening in Ukraine, and it’s the biggest drone testing ground in history. If this AI revolution were real, you’d think this is where we’d see it. But what’s actually happening there?

Well, it’s a lot less Top Gun and a lot more… duct tape and open-source software.

As a Kyiv Independent report on where AI drones in Ukraine currently stand makes clear, developers agree on one thing: full autonomy is a pipe dream. They’re using AI, sure, but it’s mostly for what they call “last-mile targeting.” A human pilot flies a cheap FPV drone, clicks on a tank on his screen, and the drone’s simple machine vision tries to stay locked on that target even if the signal cuts out. This is a huge step up, no doubt, but let’s be real. This isn't a technological revolution; my DSLR camera has had predictive focus tracking for a decade. We’re not talking about Skynet here. We’re talking about a slightly smarter version of a guided missile that costs a few hundred bucks.

One developer, Andriy Chulyk, put it perfectly: “Tesla, for example, having enormous, colossal resources, has been working on self-driving for ten years and — unfortunately — they still haven’t made a product that a person can be sure of.”

He’s right. We can’t get a Tesla to reliably navigate a suburban street without phantom braking, yet we’re supposed to believe a drone can make life-or-death decisions in the chaos of a battlefield filled with electronic warfare, smoke, and moral ambiguity? What happens when the AI has to distinguish between a surrendering soldier and one reaching for a weapon? Or a civilian car and a military transport? Kate Bondar, a senior fellow at CSIS, says the tech can’t even reliably tell a Russian soldier from a Ukrainian one. The data these systems are trained on comes from cheap, analog cameras, not the high-res military-grade sensors on a million-dollar V-BAT drone.

This whole thing feels like Silicon Valley is trying to ship a buggy beta version of a video game to the front lines and call it the future. The gap between the sales pitch in Washington and the grim reality in a trench near Kharkiv is staggering. It’s the difference between a glossy product render and the actual device that arrives with a cracked screen and a battery that dies in an hour.

The Marketing Machine Needs a Buzzword

Let’s be honest about what’s driving this. It’s not just about military advantage; it’s about money. War is a business, and as Bondar points out, “to be competitive you have to have an advantage. To have AI-enabled software… that’s something that sounds really cool and sexy.”

“AI” is the new “blockchain.” It’s the magic dust you sprinkle on your pitch deck to get venture capitalists and Pentagon officials to open their wallets. Shield AI talks about its Hivemind AI winning a dogfight, but the military never actually said who won that test. Was it a real, no-holds-barred fight, or a carefully controlled experiment designed to produce a positive headline? Are we supposed to just take their word for it? I’ve seen enough corporate demos to know that a “successful test” can mean anything.

This isn’t to say the tech is useless. It’s not. Smarter interceptor drones to take down Shaheds, or FPVs that can hold a target through tree cover—that’s genuinely useful stuff that saves lives. Yaroslav Azhnyuk’s work boosting hit rates from 20% to 80% is a massive deal for the soldiers on the ground. That’s a real, tangible improvement.

But that’s an evolution, not a revolution. It’s a supportive tool, not a replacement for human judgment. And that’s the part of the story that gets buried under the headlines about robot fighter jets. Handing the kill decision to the machine is a bad idea. No, ‘bad’ doesn’t cover it. This is a terrifyingly reckless sprint into a future nobody has thought through. We’re so obsessed with the “can we” that no one in a position of power seems to be asking “should we.”

The whole push for autonomy is based on a flawed premise: that you can remove the fallible, emotional human from the loop. But what are you replacing them with? A complex algorithm running on a tiny computer that’s been trained on flawed data and has no concept of ethics, context, or consequence. And honestly, I’m not sure that’s an upgrade.

So, We're Just Doing This, Huh?

At the end of the day, all the talk about "transformational moments" and "reshaping conflict" feels hollow. We're building machines designed to make killing more efficient, more distant, and more palatable by wrapping it in the sleek, sterile language of a tech startup. We’re automating the decision to end a human life and handing the keys to a black box. And we’re doing it not because it’s strategically wise or morally sound, but because the technology is available and the contracts are lucrative. We’ve just decided to accept this, and I can’t shake the feeling that we’re going to regret it.

-

The Business of Plasma Donation: How the Process Works and Who the Key Players Are

Theterm"plasma"suffersfromas...

-

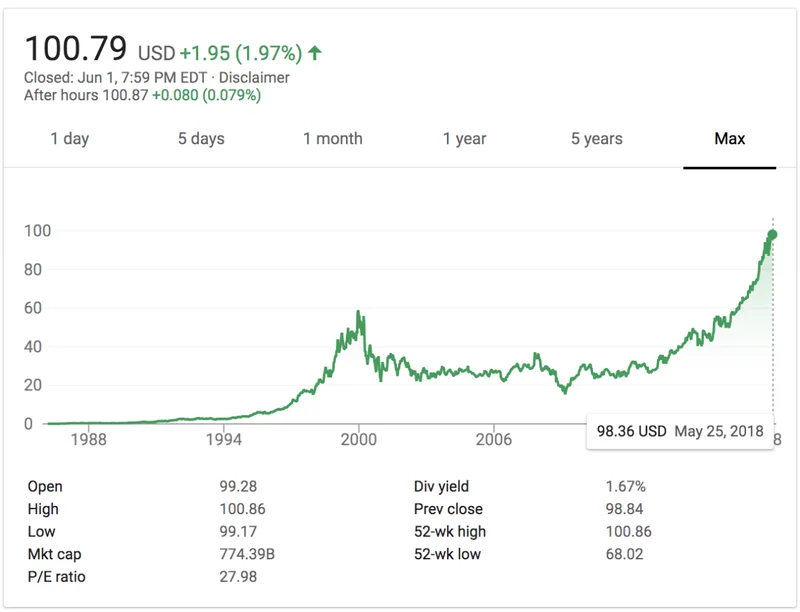

ASML's Future: The 2026 Vision and Why Its Next Breakthrough Changes Everything

ASMLIsn'tJustaStock,It'sthe...

-

Morgan Stanley's Q3 Earnings Beat: What This Signals for Tech & the Future of Investing

It’seasytoglanceataheadline...

-

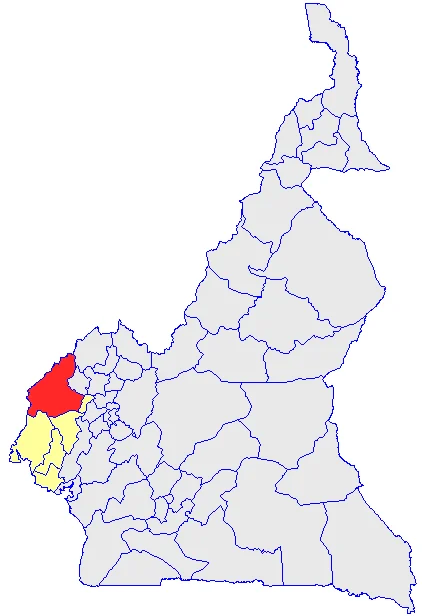

The Manyu Phenomenon: What Connects the Viral Crypto, the Tennis Star, and the Anime Legend

It’seasytodismisssportsasmer...

-

The Nebius AI Breakthrough: Why This Changes Everything

It’snotoftenthatatypo—oratl...
