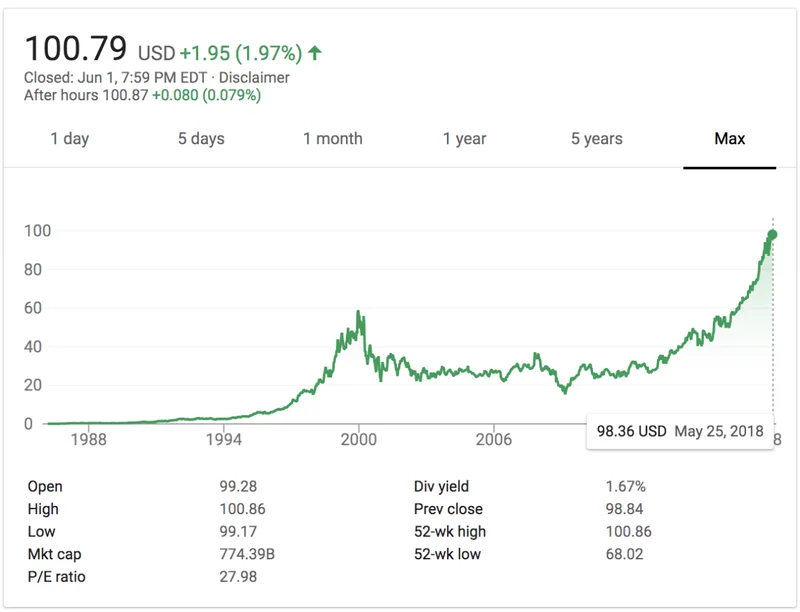

Broadcom's New Valuation: An Analyst's Breakdown of the Post-Earnings Math

The Illusion of Control: Deconstructing the Web's Coercive Cookie Contract

We’ve all seen it. You attempt to access a piece of information—an article, a data set, a simple news clip—and are met not with the content you sought, but with a sterile, accusatory message: “Access to this page has been denied.” The reason? “We believe you are using automation tools to browse the website.” The proposed fix is always the same: ensure JavaScript and cookies are enabled.

This isn't a simple technical glitch. It's a symptom of a deep, systemic contradiction at the heart of the modern internet. On one side, we have the sprawling, legally vetted architecture of "user consent." On the other, we have the functional reality: participation is not optional. The data reveals a system built not on choice but on compliance.

The Architecture of Consent

To understand the system, one must first examine the official documentation. Take NBCUniversal’s Cookie Notice, a document representative of the industry standard. It’s a masterclass in obfuscation through precision. The text explains, in exhaustive detail, the taxonomy of tracking technologies it deploys: HTTP cookies, Flash cookies, web beacons, embedded scripts, ETags, and software development kits. They are all bundled under the deceptively simple term “Cookies.”

The policy then outlines a hierarchy of data collection. There are "Strictly Necessary Cookies," the ones required for the site to function at a basic level. Then the categories expand rapidly: "Information Storage and Access," "Measurement and Analytics," "Personalization," "Content Selection," and, of course, "Ad Selection and Delivery." The scope is immense. These tools don't just see what you click; they apply market research to generate audiences, measure ad effectiveness, and track your browsing habits across platforms and devices.

The entire framework is presented as a series of levers and dials available to the user. The document dedicates a significant portion of its length—nearly half, by my count—to "COOKIE MANAGEMENT." It lists browser controls, analytics provider opt-outs, and links to various Digital Advertising Alliances. This is the architecture of consent: a complex, multi-layered system designed to give the impression of granular control. It suggests a user can meticulously curate their privacy settings, picking and choosing which data streams they allow.

But what happens when a user actually tries to pull the main lever—the one that shuts it all down? What is the consequence of revoking consent at the foundational level by disabling cookies or scripts in the browser itself? That’s where the official narrative collides with the operational one.

The Price of Dissent

The collision is blunt. It’s the error message: "Access to this page has been denied." The very tools that the policy spends thousands of words teaching you how to manage are, in fact, non-negotiable prerequisites for access. The system doesn't gracefully degrade; it fractures. The site doesn't simply show you non-personalized ads; it shows you nothing at all.

This transforms the dynamic from one of choice to one of coercion. The relationship is not a negotiation; it is an ultimatum. Enabling this vast tracking apparatus is the price of admission. I've analyzed countless corporate disclosures, and the gap between the stated policy of user choice and the functional reality of mandatory compliance has never been this stark. The entire consent framework is built on a faulty premise. It’s like being handed a detailed 40-page contract for entering a restaurant that outlines your right to refuse certain ingredients, but the front door is locked and only opens if you sign a waiver agreeing to eat whatever the chef serves. Is that truly a choice?

The policy documents even contain a subtle, almost buried, admission of this reality. A line near the end of the management section reads: "If you disable or remove Cookies, some parts of the Services may not function properly." This is a significant understatement. Based on the access-denied errors, it’s not that some parts won't function properly; it's that the primary function—access—is revoked entirely.

This framework creates a binary system. You are either a fully compliant, trackable data point, or you are an anomaly to be blocked. There is no middle ground for the skeptical user who wishes to engage with content while maintaining a baseline of privacy. The promise of "opting out" of interest-based advertising is a secondary concession, a token gesture. It allows you to tweak the flavor of the surveillance you’re under, but it doesn’t allow you to opt out of the surveillance itself.
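This sketch contrasts two behaviors: the binary gate described here, and the graceful degradation that the policy's wording ("some parts of the Services may not function properly") implies. It is written in Python and is purely illustrative, built on stated assumptions. It does not describe how NBCUniversal or any real bot-detection service works. The `Request` and `Response` types, the two handler names, the cookie and JavaScript flags, and the 403 status code are all hypothetical and were invented for this example.

```python
from dataclasses import dataclass


@dataclass
class Request:
    # Hypothetical client signals a server might infer; not a real framework's API.
    cookies_enabled: bool
    javascript_enabled: bool


@dataclass
class Response:
    status: int  # HTTP-style status code (403 chosen here as an assumption)
    body: str


ARTICLE = "article text"


def hard_gate(req: Request) -> Response:
    """All-or-nothing gate: any missing prerequisite blocks the page outright."""
    if not (req.cookies_enabled and req.javascript_enabled):
        return Response(403, "Access to this page has been denied.")
    return Response(200, ARTICLE + " + personalized ads")


def graceful_degradation(req: Request) -> Response:
    """Degrading gate: the content is always served; only the ad layer changes."""
    if req.cookies_enabled and req.javascript_enabled:
        return Response(200, ARTICLE + " + personalized ads")
    return Response(200, ARTICLE + " + non-personalized ads")


if __name__ == "__main__":
    # A reader who has disabled cookies but left JavaScript on.
    skeptic = Request(cookies_enabled=False, javascript_enabled=True)
    print(hard_gate(skeptic).status)             # 403
    print(graceful_degradation(skeptic).status)  # 200
```

The two handlers differ only in the branch taken when a prerequisite is missing. `hard_gate` withholds the content itself, while `graceful_degradation` withholds only the personalization.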

So why construct this elaborate facade of control? The answer likely lies at the intersection of legal requirements and business imperatives. Regulations like GDPR and CCPA mandate that users be informed and given choices, and the cookie notice fulfills the letter of those laws. The business model, however, depends on collecting vast amounts of user data to power analytics and targeted advertising. The technical lockout serves that business model, even as it undermines the spirit of the regulation. It's a system perfectly engineered to be legally defensible while remaining functionally coercive.

It's Not a Choice, It's a Toll

Let's be clear. The intricate web of cookie policies and privacy dashboards isn't a genuine effort to empower users. It's a liability shield. The data—the text of the policies cross-referenced with the behavior of the servers—points to a simple, unassailable conclusion: your data is the fee required to access the content. The illusion of choice is a carefully constructed user interface designed to secure your consent for a transaction you were never given the right to refuse. You can't opt out; you can only walk away.

-

The Business of Plasma Donation: How the Process Works and Who the Key Players Are

Theterm"plasma"suffersfromas...

-

ASML's Future: The 2026 Vision and Why Its Next Breakthrough Changes Everything

ASMLIsn'tJustaStock,It'sthe...

-

Morgan Stanley's Q3 Earnings Beat: What This Signals for Tech & the Future of Investing

It’seasytoglanceataheadline...

-

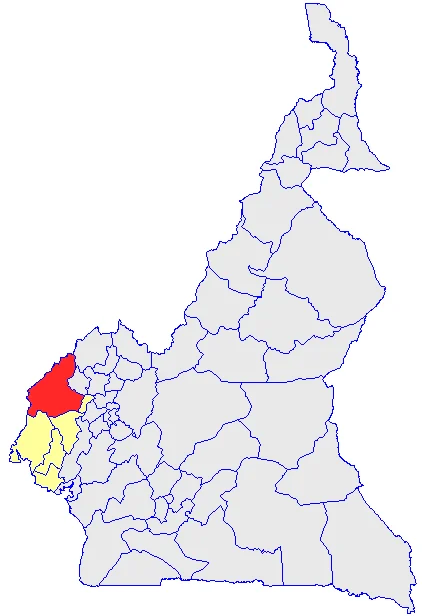

The Manyu Phenomenon: What Connects the Viral Crypto, the Tennis Star, and the Anime Legend

It’seasytodismisssportsasmer...

-

The Nebius AI Breakthrough: Why This Changes Everything

It’snotoftenthatatypo—oratl...
